A new standard recently approved by the Audio Engineering Society should guide the development of audio over Internet protocol for the professional and broadcast industries. Published on Sept. 11, 2013, AES67-2013 is the outcome of more than two years of work by a large group of participating companies, some of them in the broadcast industry, with an interest in IP audio.

We visited this topic in the Oct. 17, 2012, issue of Engineering Extra with an extended interview with Kevin Gross, leader of the AES-X192 standards development committee that led to AES67. He said then that a new standard would allow audio equipment from various manufacturers to interoperate without requiring an external translator between system types. The hope is that, in turn, this will allow the IP audio industry to grow more quickly and effectively.

SOME IP BACKGROUND

Before going further, it might be helpful to talk a bit about the technologies involved and how we got to where we are today.

First and foremost, it is essential to recall that for all things Internet-related there is a standards body, the Internet Engineering Task Force, or IETF. This somewhat amorphous and independent group is the source of what are known as “Request for Comments” documents, or RFCs. The IETF operates under an organization known as the Internet Society; it began as an activity supported by the United States government and has since become an independent organization.

The IETF defines the standards that are used in IP by issuing RFCs on various topics. Most of us know that the Internet grew out of a data communications system developed by a government agency, DARPA, and that the system was initially intended to be self-healing in case of war or civil disruption. It relies on packets of data with a simple address scheme that allows packets to be routed to their destination based on changing network conditions.

As the implications of the Internet’s power and simplicity became better understood, and network speeds began to grow, network engineers began to think of ways to use it for more than just transferring data files consisting of text. To ensure these other forms of transmission would be compatible, sets of standards were developed to cover as many types of communications as could be imagined. These sets of standards were defined and refined through the issuance of RFCs on each topic.

For example, the idea of transporting audio and video by Internet as a real-time process was developed in 1996 under RFC 1889, which was then superseded by RFC 3550 in 2003.

It is important to distinguish audio and video as “real-time” processes, as opposed to a file transfer that can be played back after a complete file has been received.

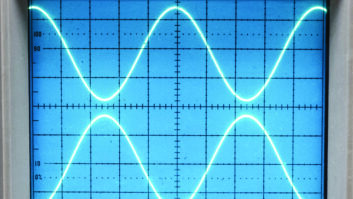

To be useful in a broadcast system, audio must mimic real time — the delay between when audio leaves a source and ends up in our ears via a set of speakers must be small enough to be unnoticeable. However, due to the need to break up an audio signal into packets, encapsulate them, transport them, re-order them in time and then reassemble the original signal, by definition transporting audio by IP can’t be a real-time process. The difference between real time and the actual delivery time is a delay known as latency.
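The packetize, transport, re-order and reassemble sequence described above can be shown with a minimal Python sketch. It is an illustration only, not how any particular product works: the function names `packetize` and `reassemble` are hypothetical, the "audio" is just a list of integers, and out-of-order arrival is simulated by shuffling the packets.

```python
import random


def packetize(samples, samples_per_packet):
    """Split a sample sequence into (sequence_number, chunk) packets."""
    return [
        (seq, samples[start:start + samples_per_packet])
        for seq, start in enumerate(range(0, len(samples), samples_per_packet))
    ]


def reassemble(packets):
    """Re-order packets by sequence number and join their chunks."""
    out = []
    for _, chunk in sorted(packets, key=lambda p: p[0]):
        out.extend(chunk)
    return out


# Stand-in for a short burst of audio (assumed values, not real samples).
samples = list(range(480))
packets = packetize(samples, 48)

# Simulate packets arriving out of order on the network.
random.Random(0).shuffle(packets)

# The receiver must hold packets long enough to sort them; that wait
# is one contribution to latency.
assert reassemble(packets) == samples
```

The receiver cannot play a packet until it is reasonably sure no earlier packet is still in flight, so the re-ordering step always adds some delay on top of the time spent filling each packet.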

For an audio standard to be called high performance, it is essential to standardize on a maximum latency for audio transport that meets the goal of being unnoticeable. This is a special case of data transmission that requires its own set of rules and procedures.

Likewise, a certain packet size and definition of address header content must be maintained to optimize audio performance.
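To see why packet size matters, it helps to work through the arithmetic. The sketch below is a rough illustration under assumed values (48 samples per packet, 48 kHz sampling, two channels, 24-bit samples); these numbers are chosen for the example and are not quoted from the standard. The helper names are hypothetical. The 40 bytes of overhead assume an IPv4 header without options (20 bytes), a UDP header (8 bytes) and a basic RTP header (12 bytes).

```python
def packet_time_ms(samples_per_packet, sample_rate_hz):
    """Time needed to fill one packet with audio, in milliseconds."""
    return 1000.0 * samples_per_packet / sample_rate_hz


def payload_bytes(samples_per_packet, channels, bits_per_sample):
    """Size of the raw audio carried in one packet."""
    return samples_per_packet * channels * bits_per_sample // 8


def ip_packet_bytes(payload, ip_header=20, udp_header=8, rtp_header=12):
    """Payload plus IPv4, UDP and RTP headers (assumes no options or extensions)."""
    return payload + ip_header + udp_header + rtp_header


# Assumed example values, for illustration only.
fill_time = packet_time_ms(48, 48000)   # 1.0 ms of audio per packet
payload = payload_bytes(48, 2, 24)      # 288 bytes of audio
total = ip_packet_bytes(payload)        # 328 bytes on the IP layer

print(fill_time, payload, total)
```

Smaller packets fill faster and so reduce latency, but the fixed header overhead then makes up a larger share of every packet and the network must handle more packets per second.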

These definitions and concepts are encompassed in a pair of standards known as Real-time Transport Protocol and RTP Control Protocol, or RTP and RTCP. These standards are at the heart of Internet audio, from high-speed LAN-based systems to streaming audio over the public Internet.
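For readers who want to see what RTP actually puts in front of the audio, here is a minimal Python sketch of the 12-byte fixed header laid out in RFC 3550. The function names `build_rtp_header` and `parse_rtp_header` are hypothetical, the payload type 96 and the SSRC value are arbitrary example values, and the sketch ignores padding, header extensions and contributing-source lists.

```python
import struct

RTP_VERSION = 2


def build_rtp_header(payload_type, sequence, timestamp, ssrc, marker=False):
    """Pack the 12-byte fixed RTP header (no padding, extension or CSRCs)."""
    byte0 = RTP_VERSION << 6                        # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack(
        "!BBHII",                                   # network byte order
        byte0,
        byte1,
        sequence & 0xFFFF,                          # 16-bit sequence number
        timestamp & 0xFFFFFFFF,                     # 32-bit media timestamp
        ssrc & 0xFFFFFFFF,                          # 32-bit source identifier
    )


def parse_rtp_header(data):
    """Unpack the fixed RTP header from the start of a packet."""
    byte0, byte1, sequence, timestamp, ssrc = struct.unpack("!BBHII", data[:12])
    return {
        "version": byte0 >> 6,
        "padding": bool(byte0 & 0x20),
        "extension": bool(byte0 & 0x10),
        "csrc_count": byte0 & 0x0F,
        "marker": bool(byte1 & 0x80),
        "payload_type": byte1 & 0x7F,
        "sequence": sequence,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


# Example values are assumptions chosen for illustration.
header = build_rtp_header(payload_type=96, sequence=1, timestamp=48, ssrc=0x1234)
fields = parse_rtp_header(header)
assert len(header) == 12
assert fields["version"] == 2 and fields["sequence"] == 1
```

The sequence number lets a receiver detect lost or out-of-order packets, while the timestamp tells it where each packet's audio belongs on the playback timeline.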

RAPID ACCEPTANCE

In the United States, the radio broadcasting industry has already shown itself ready, if not advanced, in the move to embrace networked audio solutions.

The initial driver was the development of cheap and accessible switches designed for computer networks that could provide more than enough bandwidth to handle digital audio successfully over inexpensive Category 5 wiring. Early systems, known as Layer 2 type, used the Ethernet protocol to connect machines using what is known as the MAC address, a unique device identifier.

This was an interesting and valuable transport, but it did not connect audio across anything but a flat switch environment. Networked audio requires the use of IP addresses. As Layer 3 switches capable of decoding packet IP addresses came to market and dropped in cost, manufacturers moved to implement true audio networks.

Wheatstone and Axia are among those in the United States that have developed and marketed IP audio systems. In Europe, a number of companies have come up with similar systems, such as Ravenna from ALC NetworX. While all these systems feature audio that can be transported over networks using the standard protocols described above, the fact that they were developed independently leads to the unsurprising outcome that they don’t necessarily work with each other.

AES67 attempts to resolve that problem by defining a standard approach to using these already standardized protocols. Essentially, it defines a way of using the standard protocols, such as RTP, in a manner that is the same for everyone.

The great benefit to the audio community of developing an IP audio standard is that it opens up a consistent method of routing high-fidelity audio signals over any and all IP networks. Previous efforts resulted in proprietary methods that would work within the confines of a high-speed LAN, possibly with custom configurations in network switches, or even custom-featured network switches as proposed by AVB. AES67 allows what have been, up until now, islands of audio networking to connect directly to the public Internet and in turn transport packets into what had been foreign networks.

DOES IT END HERE?

So is this the final word on IP audio standardization?

Phil Owens, who handles eastern U.S. sales at Wheatstone Corp., said, “Definitely not.” He describes AES67 as “a basic framework that allows manufacturers to use the same base parameters needed to send and receive multicast audio over IP between their I/O devices.”

Andreas Hildebrand of ALC NetworX agrees: “It is neither intended to be a complete solution on its own nor will it replace existing solutions. … As such, the standard defines a minimum set of protocols and formats which need to be supported by other solutions in order to establish synchronization and stream interchange.”

Both made the point that there is much more to a professional IP audio system than just the transport standards.

“Without the elements of discovery or control … AES67 only provides raw audio transport,” Owens said. This means that how an individual manufacturer might implement start/stop functions on a console or studio tally lights is still likely to remain proprietary.

Hildebrand adds, “Existing solutions usually offer functionality or performance characteristics which go beyond AES67 (e.g. advertisement and discovery, device control, lower latency, extended payload formats, higher sampling rates etc.); consequently AES67 intends not [to] replace these solutions, but requires manufacturers or solution providers to enhance their capabilities to support AES67 guidelines.”

STILL TO GO

Owens had this to say about the possibility of future standards efforts: “Proprietary systems will always have a functional advantage — devices that are designed from the ground up to work together as a system will do just that with no translation required. This includes interfacing with third-party devices using the standards-based manufacturers’ protocols currently available. We do expect, though, that there will be future specs developed that can be used in conjunction with AES67.

“AES64 is an example. This spec proposes to standardize control elements by assigning every possible control a number, much the same as MIDI controllers work in the world of musical instruments. For example, a sustain pedal ON message #64 is understood by all synth manufacturers. Over time, AES67 and other associated specs may provide all the functions needed for true interoperability.”

CONCLUSIONS

We live in a world that is moving quickly toward integrating new products and services across networks that span all pre-existing technologies. It’s an exciting time for audio. In 10 years, it is likely that virtually all new equipment will be networkable and capable of greater performance precisely for this reason. Professional audio is moving in this direction fast. The wise engineer will want to learn as much as possible about networking and IP standards such as RTP in order to be able to keep up.

Thanks to Phil Owens (www.wheatstone.com) and Andreas Hildebrand (www.ravenna.alcnetworx.com) for their assistance.

Get a copy of AES67-2013 here.