Digital Audio Basics

Apr 1, 2008 12:00 PM, By Glen Kropuenske

The future of audio is digital and the quality produced by digital audio is of great importance to broadcasters, producers, live venues and the listening audience. Digital audio can be prone to mysteriously disappearing or producing annoying pops or clicks. With the increasing amount and complexity of digital audio equipment, video multiplexing/de-multiplexing, complex routing paths and cable defects/influences, problems can develop with the digital audio signal. Having a test and measurement tool to diagnose these problems becomes essential in producing high quality digital audio.

Digital sampling to binary bits

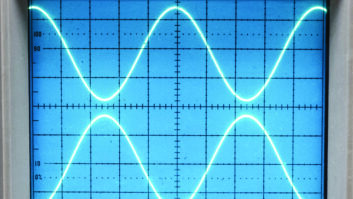

The analog audio signal is converted to digital using a sampling process involving an analog-to-digital converter (A/D). To convert an analog audio signal from continuous changes vs. time to discrete digital values vs. time, the level of the signal is measured at certain intervals in time. This process is known as digital sampling. Sampling is like taking snapshots of the signal level as it increases and decreases over time. The sampled voltage levels are converted to digital binary values. The captured sequential digital binary value samples represent the audio levels as the audio varies in time.

Fig. 1. Sampling an analog waveform at a specific rate.


Sampling happens at equally separated intervals. The number of intervals in which the values of the signal level are captured (snapshots) in one second is the sampling rate (see Fig. 1). The sampling rate frequency is the reciprocal of a sampling interval (time). The measurement unit of the sampling rate is the hertz (Hz) or number of samples per second.

The conversion process samples the voltage level of the audio at a rate well above the highest audio frequency. The Nyquist Theorem states that the sampling rate must be greater than two times the highest audio frequency component. Therefore, to recreate audio at 20kHz, the sampling rate must be higher than 40kHz.
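The relationship between sampling rate, sampling interval and the Nyquist limit can be sketched in a few lines of Python. The function names are illustrative only and do not come from any library.

```python
def sampling_interval_s(sample_rate_hz: float) -> float:
    """Time between two samples, in seconds (reciprocal of the sampling rate)."""
    return 1.0 / sample_rate_hz


def nyquist_limit_hz(sample_rate_hz: float) -> float:
    """Half the sampling rate: audio components must stay below this frequency."""
    return sample_rate_hz / 2.0


for rate in (44_100, 48_000, 96_000):
    interval_us = sampling_interval_s(rate) * 1e6
    limit_khz = nyquist_limit_hz(rate) / 1000
    print(f"{rate} Hz: interval {interval_us:.3f} us, Nyquist limit {limit_khz:.2f} kHz")
```

At 44.1kHz the limit works out to 22.05kHz, which leaves a small margin above 20kHz audio.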

Quantization

Fixing a digital value to the audio level at each sample interval is called quantization. The amplitude range of the audio waveform is divided into level steps. For example, there are 16 discrete binary values to specify the amplitude or level using a 4-bit binary system. The 16 values are divided in half (almost), with seven binary values to indicate positive voltage levels, eight values to indicate negative voltage levels and one value for zero.

Fig. 2. Assigning amplitude values to a sampled waveform.


The 4-bit quantization provides an example of how audio levels can be converted to digital 4-bit words representing the audio signal. However, the binary range is not symmetrical for positive and negative values and it has insufficient values for adequate low audio level reproduction. It should be noted that with digital quantization, the audio level must not exceed the quantization range or largest digital code word (1111), or digital clipping occurs. Fig. 2 shows the quantization levels on a sampled waveform.

A quantized binary value may be encoded in a form adapted to the sampled signal and system requirements to provide overall system improvements. The most widely adopted coding system is pulse code modulation (PCM). PCM quantizes linearly, using a fixed scale of equal quantizing intervals over the signal amplitude range. PCM makes use of a two’s complement system to distinguish positive and negative binary coded values (see Fig. 3). The resolution of the analog-to-digital (A/D) conversion is determined by the number of quantizing levels possible. The bit word length determines the number of quantizing level steps or amplitudes (resolution) that can be achieved. For example, an 18-bit digital code word provides 262,144 increments for coding analog signal amplitudes.
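As a rough illustration of linear quantization with two's complement coding, the sketch below maps a level to a signed code and clips anything outside the range. The normalized input range of -1.0 to +1.0 and the function names are assumptions made for the example, not part of any standard.

```python
def quantize(level: float, bits: int = 4) -> int:
    """Map a level (nominally -1.0..+1.0, an assumed scale) to a signed integer code.

    Levels outside the range are clipped to the most negative or most positive code.
    """
    steps = 1 << (bits - 1)              # 8 steps per polarity for 4 bits
    code = round(level * steps)
    return max(-steps, min(steps - 1, code))


def twos_complement(code: int, bits: int = 4) -> str:
    """Return the two's complement bit pattern of a signed code as a string."""
    return format(code & ((1 << bits) - 1), f"0{bits}b")


def quantizing_levels(bits: int) -> int:
    """Number of distinct codes available for a given word length."""
    return 1 << bits


print(twos_complement(quantize(0.5)))    # +4 -> 0100
print(twos_complement(quantize(-1.0)))   # -8 -> 1000
print(twos_complement(quantize(2.0)))    # clipped to +7 -> 0111
print(quantizing_levels(18))             # 262144
```

The clipped case shows why the input must stay inside the quantization range: every level above the top code collapses to the same value.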

Fig. 3. The two’s complement numbering system is used to differentiate positive and negative binary encoded values.


The number of bits used to form the PCM digital words (bytes) used to represent each of the sampled audio levels can vary, but typically range from 8 to 24 bits. The more bits used to represent the amplitude, the greater the dynamic range that can be represented. Each bit provides approximately 6dB of range. An 8-bit digital audio signal has a 48dB dynamic range (quiet to loud audio range) while 16-bit digital audio provides 96dB of dynamic range. A 24-bit digital audio word length provides 144dB of dynamic range.
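The 6dB-per-bit figure follows from the ratio between the full code range and a single quantizing step, 20·log10(2^N). A minimal sketch of that arithmetic (the function name is illustrative):

```python
import math


def dynamic_range_db(bits: int) -> float:
    """Ratio of the full code range (2**bits steps) to one step, in decibels."""
    return 20 * math.log10(2 ** bits)


for bits in (8, 16, 24):
    print(f"{bits}-bit: {dynamic_range_db(bits):.1f} dB")
```

The exact figure is about 6.02dB per bit, so the rounded values of 48dB, 96dB and 144dB are slightly conservative.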

PCM digital audio is often sampled at 44.1kHz or 48kHz, although other rates are possible. Sampling rates up to 192kHz can easily be found in some equipment.

In a digital audio system, the maximum audio level corresponds to 0dBFS (dB full scale) which is assigned the largest digital code word. Manufacturers have adopted the familiar 0VU level equal to +8dBm as a standard operating level (SOL). This level corresponds to -20dBFS, in which the digital values are well below the largest digital code word value. This provides 20dB of range for audio peaks to go above +8dBm before digital clipping occurs.
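To get a feel for how far below full scale a -20dBFS level sits, the sketch below converts a dBFS value to a peak code value. It assumes 0dBFS corresponds to the largest positive two's complement code; the function name and that mapping are illustrative assumptions.

```python
def dbfs_to_peak_code(dbfs: float, bits: int = 16) -> int:
    """Peak code value for a level given in dBFS (assumes 0 dBFS = largest positive code)."""
    full_scale = (1 << (bits - 1)) - 1       # 32767 for 16-bit audio
    return round(full_scale * 10 ** (dbfs / 20))


print(dbfs_to_peak_code(0))      # 32767
print(dbfs_to_peak_code(-20))    # 3277, one tenth of full scale
```

A 20dB drop is a factor of ten in amplitude, so peaks at the standard operating level use only about a tenth of the available code range.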

Putting the bits together

To retain the audio as a digital signal, each digital sample word or quantized value is sequentially output and moved along the transmission line or cable. This produces a stream of digital bits, word by word or sample by sample. It is important to realize that for each sample interval an 8-bit to 24-bit digital word length is created. These are serially assembled and output from an A/D converter. The number of bits per second (b/s) is therefore equal to the sample frequency multiplied by the digital word length.

The amount of data created by digital audio is quite large. Sixteen bits per sample at 44.1kHz creates 705,600 bits per second. To convert bits to bytes, divide by eight (8 bits = 1 byte). A 24-bit digital audio sample with a 96kHz sample rate produces a bit-rate of 2,304,000 bits per second. If two-channel audio is produced and assembled together onto a common transmission line, the data rate doubles.
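The raw PCM data rates quoted above can be reproduced with a short calculation. The helper name is illustrative.

```python
def pcm_bit_rate(sample_rate_hz: int, bits_per_sample: int, channels: int = 1) -> int:
    """Raw PCM data rate in bits per second, before any framing or channel coding."""
    return sample_rate_hz * bits_per_sample * channels


print(pcm_bit_rate(44_100, 16))               # 705600 b/s
print(pcm_bit_rate(44_100, 16) // 8)          # 88200 bytes/s
print(pcm_bit_rate(96_000, 24))               # 2304000 b/s
print(pcm_bit_rate(44_100, 16, channels=2))   # 1411200 b/s for two channels
```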

Without modification, a PCM digital audio stream can be difficult to receive reliably. If all the bits are set to ones or zeros for a period of time, the signal is essentially a dc voltage. A dc signal cannot be passed reliably by some circuits, and a prolonged dc level causes a shift or offset on the digital transmission line. A lack of digital transitions on the line would also make locking a receive clock or recognizing sync transitions difficult for the receiver.

To resolve this potential problem, the PCM digital data is encoded using another scheme called bi-phase mark coding (BPM). Bi-phase coding ensures that a dc shift doesn’t occur on the line, by maintaining a dc balance. A dc balance means the on-time (highs) must equal the off-time (lows) resulting in a mean or average of zero volts. Bi-phase coding ensures balance by producing continuous and balanced transitions on the data line.

Fig. 4. Bi-phase coding ensures balance by producing continuous and balanced transitions on the data line.

With bi-phase coding, the time slot for each bit begins with a transition and ends with a transition. If the data bit is a 1, a transition occurs in the middle of the time slot, in addition to the transitions at the beginning and end of the time slot. A data 0 has only the transitions at the beginning and end of the time slot and does not have a transition in the middle. This ensures regular transitions and voltage balance whether the data is a string of zeros or ones. Fig. 4 illustrates this. With regular transitions, the signal is a balanced ac signal from which a receiver can easily recover the clock rate.

Note that with bi-phase coding, the clock frequency is two times the audio data bit rate. Every audio bit is represented as two logical states when bi-phase coded. Each audio bit is divided into two time intervals or cells per data bit.
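The bi-phase mark rules described above can be sketched as a small encoder that turns each data bit into two cells. This is a simplified model of the line levels (0 and 1 stand for low and high); the function name and the starting level are assumptions for the example.

```python
def biphase_mark_encode(bits, start_level=0):
    """Encode data bits as bi-phase mark cells, two cells per data bit.

    Every time slot starts with a transition; a data 1 adds a second
    transition in the middle of the slot, a data 0 does not.
    """
    level = start_level
    cells = []
    for bit in bits:
        level ^= 1               # transition at the start of the time slot
        cells.append(level)
        if bit:
            level ^= 1           # extra mid-slot transition for a 1
        cells.append(level)
    return cells


print(biphase_mark_encode([0, 0, 0, 0]))   # [1, 1, 0, 0, 1, 1, 0, 0]
print(biphase_mark_encode([1, 1, 1, 1]))   # [1, 0, 1, 0, 1, 0, 1, 0]
print(biphase_mark_encode([1, 0, 1]))      # [1, 0, 1, 1, 0, 1]
```

Both the all-zeros and all-ones inputs produce equal numbers of high and low cells, which is the dc balance the coding is meant to provide.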

Blocking it together

The audio data words are assembled and transmitted serially. Some form of organization is needed so the receiver can reassemble and identify the assorted bits of information in the data stream. Organization involves assembling the data into blocks. Each block consists of 192 frames of audio. Each of the 192 frames can be divided into two sub-frames for two-channel audio. Each frame is produced at the digital audio sampling rate. At a 48kHz audio sampling rate, each frame is 20.833µs, with each 192-frame block lasting 4ms.
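Frame and block timing follow directly from the sampling rate, since one frame is produced per sample period and a block holds 192 frames. A minimal sketch (names are illustrative):

```python
FRAMES_PER_BLOCK = 192


def frame_period_s(sample_rate_hz: float) -> float:
    """Duration of one frame: one frame is sent per sample period."""
    return 1.0 / sample_rate_hz


def block_period_s(sample_rate_hz: float) -> float:
    """Duration of one block of 192 frames."""
    return FRAMES_PER_BLOCK / sample_rate_hz


for rate in (44_100, 48_000):
    print(f"{rate} Hz: frame {frame_period_s(rate) * 1e6:.3f} us, "
          f"block {block_period_s(rate) * 1e3:.3f} ms")
```

At 48kHz this gives a 20.833µs frame and a 4ms block; at 44.1kHz the block is a little longer, about 4.354ms.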

Fig. 5. The AES-3 data structure.

Each frame can carry two audio channels. In a two-channel mode, the samples from both channels are transmitted in consecutive sub-frames. Channel 1 is in sub-frame A and channel 2 is in sub-frame B. In stereo mode, the interface is used to transmit stereo audio with both channels simultaneously sampled. The left audio is in the A channel sub-frame and the right audio is contained in the B sub-frame. Fig. 5 shows the AES-3 data structure.

Fig. 6. The structure of the data sub-frames

In addition to the digital audio word data bits, each sub-frame contains additional data. Each sub-frame consists of 32 bits, which include 20 or 24 bits of audio word data; with a 24-bit word, the remaining 8 bits carry additional data. Each sub-frame includes bits for preamble or sync data, auxiliary data, audio data word bits, validity (V), user (U), channel status (C) and parity (P) data bits. Fig. 6 shows the sub-frame structure.

Considering that each frame consists of 32 × 2 bits, occurring in 20.833µs (FS = 48kHz), the bit-rate increases to 1,536,000 × 2 = 3,072,000b/s.

For each sample, two 32-bit words are transmitted, which results in a bit-rate of 2.8224Mb/s at 44.1kHz sampling rate or 3.072 Mb/s at 48kHz sampling rate.
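The framed bit-rate is simply 64 bits per sample period, and the bi-phase cell rate is twice that. The sketch below reproduces the figures; the constant and function names are illustrative.

```python
SUBFRAME_BITS = 32
SUBFRAMES_PER_FRAME = 2


def interface_bit_rate(sample_rate_hz: int) -> int:
    """Bits per second on the interface: one 64-bit frame per sample period."""
    return SUBFRAME_BITS * SUBFRAMES_PER_FRAME * sample_rate_hz


def biphase_cell_rate(sample_rate_hz: int) -> int:
    """Bi-phase coding uses two cells per bit, so the cell rate is doubled."""
    return 2 * interface_bit_rate(sample_rate_hz)


print(interface_bit_rate(44_100))   # 2822400 b/s
print(interface_bit_rate(48_000))   # 3072000 b/s
print(biphase_cell_rate(48_000))    # 6144000 cells/s
```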

Preamble or sync bits

Fig. 7. Detail of the sub-frame preamble

The first four bits of each sub-frame are preamble bits. The preambles may be called sync words, as they identify the start of a new audio block and of each sub-frame. A Z sync word marks the start of the first frame in the 192-frame block. The sync word Y indicates the start of every B sub-frame. The sync word X indicates the start of the A sub-frame in all remaining frames. The bit patterns are shown in Fig. 7.

The preamble has a distinctive data pattern that deliberately does not comply with the bi-phase coding rules. The first bi-phase coding violation occurs during the initial portion of the preamble marking the start of a frame. The initial portion lacks a normal bi-phase transition. The remaining preamble transitions identify the word type as Z, Y or X. This purposeful violation of the bi-phase coding rule allows a digital audio receiver to identify the start of the audio blocks and sub-frames. Table 1 shows the details of the preamble.

It should be noted that the bi-phase rule breaking is by design and its effects do not cause any problems. Also by design, the bi-phase coding may cause each bit of the preamble or each of the 32 bits in the sub-frame to be opposite in phase.

The four bits following the preamble may be used as part of the main digital audio word or can be used for an additional audio signal known as auxiliary audio data. The use of auxiliary audio is rare. However, one application is for voice control communication. If the auxiliary bits are used for a special application, the audio data word that follows is limited to 20 bits. If the auxiliary data bits are not used for a special application, they may be added to the audio data word, extending the word length to 24 bits. Note the location of the auxiliary data bits in Fig. 6.

Audio sample word bits

The audio data word contains the digital audio bits defining the quantization values. The sequencing of values through the 192 sub-frames in each block is the digital audio itself. After one frame ends, another begins in the serial stream of digital audio bits.

The data word length can be 8 to 24 bits. If the auxiliary bits are defined for use for the digital audio data word, a 24-bit word length for maximum resolution and dynamic range is available. If the auxiliary bits are specified for special use, the maximum word length is 20 bits.

The audio data word is put into the sub-frame with the LSB (least significant bit) at the left while the MSB (most significant bit) is at the right in the pictured data stream. While this may seem backward as illustrated on paper, as we are programmed to read from left to right, the data is clocked out of the A/D converter in the order of LSB to MSB, which is the order of the digital bit stream vs. time.

The validity bit (V) is set to zero if the audio sample word data is correct and suitable for D/A conversion. The validity bit was intended to signal the receiver to qualify it as being suitable for conversion. Several audio standards specify this bit to be set (to 1) when carrying data-compressed digital audio that cannot be converted to audio with a linear PCM receiver. This would cause the receiver to mute, preventing a burst of high-level noise output before the channel status data can be read and interpreted to indicate the digital signal is not PCM audio.

There has been some variation and confusion in the use of the validity bit. Certainly when the audio word data is modified with compression schemes, the validity bit must be set. However, some applications have set the validity bit if an error was found and concealed. As a result, some manufacturers have chosen to have the receiver not generate or verify the sample word validity.

The user bit (U) in each sub-frame occurs after the validity bit (V) and prior to the channel status bit (C). The user bits accumulated from each of the 192 sub-frames for that channel provide specific user information. The information is typically application-specific information regarding the program related information or instructions for preservation of the data.

The channel status bit (C) occurs after the user bit (U) and prior to the parity bit (P) in each sub-frame. The channel status bits accumulated from each of the 192 frames provide information regarding the audio in each channel. Since the information is usually identical in each channel, a receiver may elect to read only one channel’s status bits.

Interpretation of the 192 channel status bits can best be understood by grouping the recovered bits from each sub-frame sequentially into 8-bit words (bytes), accumulating into 24 rows or bytes. In this manner, information regarding the channel’s audio is indicated to the receiver.
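The grouping of 192 channel status bits into 24 bytes can be sketched as follows. The choice to treat the first received bit of each group as the least significant bit is an assumption made for this example, as is the function name.

```python
def channel_status_bytes(c_bits):
    """Group the 192 channel status bits of one block into 24 byte values.

    Assumption for this sketch: within each group of eight, the first
    received bit is treated as the least significant bit.
    """
    if len(c_bits) != 192:
        raise ValueError("expected one channel status bit from each of 192 frames")
    result = []
    for start in range(0, 192, 8):
        value = 0
        for position, bit in enumerate(c_bits[start:start + 8]):
            value |= bit << position
        result.append(value)
    return result


# Hypothetical block: only the bits from frame 0 and frame 9 are set.
bits = [0] * 192
bits[0] = 1
bits[9] = 1
status = channel_status_bytes(bits)
print(len(status), status[0], status[1])   # 24 1 2
```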

The parity bit (P) is used to keep the data bits in the sub-frame (bits 4 to 31) at even parity. Even parity ensures that the total number of ones in the sub-frame data is always an even number. The parity bit permits the detection of an odd number of bits or bit transitions (bi-phase coding ones) indicating a bit error.
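A hedged sketch of how the data area of a sub-frame (bits 4 to 31) might be assembled: a 24-bit audio word sent LSB first, followed by V, U, C and an even-parity bit. It assumes the auxiliary bits are used to extend the audio word to 24 bits; the function name and argument layout are illustrative.

```python
def build_subframe_data(sample: int, v: int = 0, u: int = 0, c: int = 0) -> list[int]:
    """Return bits 4..31 of a sub-frame in transmission order (28 bits).

    Assumes the auxiliary bits extend the audio word to 24 bits.
    """
    word = sample & 0xFFFFFF                        # 24-bit two's complement word
    audio = [(word >> i) & 1 for i in range(24)]    # LSB is transmitted first
    bits = audio + [v, u, c]
    parity = sum(bits) % 2                          # makes the total count of ones even
    return bits + [parity]


def parity_ok(data_bits: list[int]) -> bool:
    """Check that the data area holds an even number of ones."""
    return sum(data_bits) % 2 == 0


data = build_subframe_data(1)
print(len(data), parity_ok(data))    # 28 True

data[5] ^= 1                         # simulate a single bit error
print(parity_ok(data))               # False
```

As the text notes, a parity check of this kind catches an odd number of bit errors but not an even number.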

Even parity means that there is always an even number of mid-bit transitions (ones) in the data area of the sub-frame. Consequently, under normal bi-phase coding, the first bit of every preamble or sync word begins with a transition of the same polarity. The second-half transition of the parity bit is also the same as in the previous sub-frame. This alone can be used to check for an error in the sub-frame data, ignoring the parity bit. In many cases this is more accurate, as two missed transitions would still result in even parity, and the error would be overlooked if only the parity bit were being used as an error indicator.

Kropuenske is an application engineer at Sencore.

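Table 1. Sub-frame preamble bit patterns. Each preamble has two forms that are opposite in phase.

| Preamble | Pattern | Opposite-phase pattern |
|----------|----------|------------------------|
| Z | 11101000 | 00010111 |
| X | 11100010 | 00011101 |
| Y | 11100100 | 00011011 |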