The author is sales and marketing manager at 2wcom.

The way people consume media content is changing significantly. Together with the expansion of IP, this strongly drives the convergence of media production and distribution units for radio, video and the internet.

Broadcasters therefore have the chance to adapt to the transitioning media-consumption behavior and use it to their advantage.

- Target group orientation: Younger audiences are attractive, but it’s crucial to offer each target group the content it wants, whether on mobile or stationary devices.

- Cost and time efficiency: When a contribution is treated as a cross-media project, the video, radio and internet units no longer work separately.

- Expand the value chain and encourage interaction: Cross-media content production and distribution means all playout sources can refer to each other. This leads to significantly higher coverage. Moreover, integration with social networks increases the opportunities for interaction with the audience. Both aspects open up the chance to deploy new marketing formats.

TECHNOLOGICAL ADVANCES

Results of these developments include international projects such as 3GPP and 5G-Xcast. The latter aims to build a universal, multidirectional IP network for efficient large-scale media distribution. The project focuses on dynamic switching between unicast, multicast and broadcast, and enables spectrum-efficient distribution of program content to a large number of simultaneous users with a single stream.

End users can receive reliable, high-quality live and linear content without using up their mobile data allowance. Looking to the future, 5G-Xcast will allow national and private broadcasters to launch new on-demand formats or offer interactive features alongside classic linear content.

The transformation to cross-media production can only be achieved with a solid technical foundation. It’s important to carefully consider which approach best meets system requirements and the associated applications. For example, the best-of-breed approach uses, for each device, only those components that best meet the technical requirements. This method can be expensive, and each eligible solution must be evaluated with regard to, for example, compatibility or compliance with IT security guidelines.

Here are a few significant points focusing on interoperability and flexibility:

- Choose standards and protocols that best support the respective use cases. The SMPTE ST 2110 standard is designed for cross-media production. It separates audio, video and ancillary data, so each can be handled individually depending on the playout source. This is very practical, as SMPTE ST 2110 can receive all AES67 streams at a 48 kHz sample rate. It is interoperable with all common standards supporting pure audio/radio productions, such as Ravenna (distribution networks), Livewire+ (studio) or Dante (concert halls, conferences or studio). A specific application could be, for example, audio description in a video, which helps blind and visually impaired people follow the story by ear. For this purpose, it’s sufficient to produce the audio content with one of the previously mentioned standards. Thanks to the structure of SMPTE ST 2110, the respective audio can be added to the stream that already includes the video signal and ancillary data. Besides the flexible handling of different stream sources, negotiation and management of all connections is straightforward for unicast and multicast streams because all relevant protocols are supported (SIP for unicast and RTSP/SDP for multicast). Unicast, for instance, makes sense for permanent point-to-point connections between studios. To handle advertising and discovery, connection management and network control in environments based on SMPTE ST 2110 and AES67, the Advanced Media Workflow Association (AMWA) has published the NMOS standard. Recently launched, this standard is constantly being refined based on real-life technical requirements.

- Compatibility of audio algorithms can’t be taken for granted. AAC profiles and Opus in particular are implemented in different ways, which leads to incompatibilities with regard to frame sizes. It is therefore important to put every manufacturer through its paces to ensure all possible variants of an audio algorithm are supported.

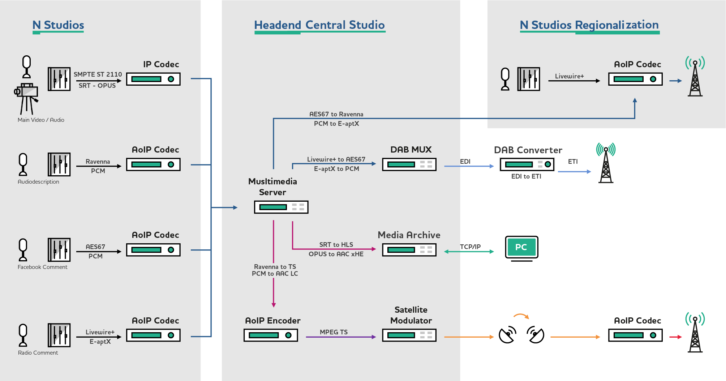

- Conversion of audio formats, protocols and standards according to the use case. For example, in terms of bandwidth economy, it makes sense to convert an audio stream for publication on a website from the high-quality PCM format to the compressed xHE-AAC format. Or, to provide a stream not only for audio over IP networks but also for DAB+, it must be possible to convert from the Ravenna standard to AES67.

- Intelligent content management of streams is essential, among other reasons because the number of audio streams for a production is significantly higher than the number of video streams. It enables the combination of the appropriate elementary streams into logical groups. For example, commentary for Facebook usually differs from the regular commentary, so the “normal audio comment stream” is replaced with the “Facebook comment stream.” You can then select the relevant streams for further processing.

- Virtualization improves scalability and maintenance. Most broadcasters want to be able to expand their networks as easily as possible, add new services with just a mouse click or mirror the configuration of one device to another. Scalability can be notably improved by using virtualization strategies. The possibilities introduced by Docker or VMware to copy instances, take snapshots or run them across multiple hardware devices are a great improvement for scaling and maintaining networks.

- That also has a major impact on the rack space needed. Thanks to virtualization, applications can share the same hardware or even run as a swarm across multiple hardware units with different hardware configurations. That reduces the number of devices needed, because server hardware has, in most cases, a lot more processing power than the specialized hardware of codec manufacturers. Thanks to AES67 and other audio over IP standards, the need for physical hardware interfaces is slowly disappearing, which opens the door for virtualized solutions that depend on an all-IP infrastructure. With high-bandwidth, robust IP lines, audio processing in the cloud becomes possible. As a consequence, manufacturers have to pick up the pace and offer their solutions as virtualized software.
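
To make the bandwidth argument for format conversion concrete, here is a minimal Python sketch that computes the raw payload bit rate of linear PCM and compares it with a compressed web stream. The 24-bit stereo format and the 32 kbit/s target are assumptions chosen for illustration only; the article does not specify them, and packet overhead is ignored.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bit_depth: int, channels: int) -> float:
    """Raw payload bit rate of linear PCM audio in kbit/s (no packet overhead)."""
    return sample_rate_hz * bit_depth * channels / 1000


def reduction_factor(source_kbps: float, target_kbps: float) -> float:
    """How many times smaller the target stream is than the source stream."""
    return source_kbps / target_kbps


# Assumption: 48 kHz (the AES67 sample rate named above), 24-bit, stereo.
studio_kbps = pcm_bitrate_kbps(48_000, 24, 2)

# Assumption: a hypothetical 32 kbit/s compressed stream for the website.
web_kbps = 32.0

print(f"PCM contribution stream: {studio_kbps:.0f} kbit/s")
print(f"Compressed web stream:   {web_kbps:.0f} kbit/s")
print(f"Reduction factor:        {reduction_factor(studio_kbps, web_kbps):.0f}x")
```

Under these assumptions, the uncompressed stream needs 2,304 kbit/s, which is 72 times the bit rate of the compressed web stream.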

ENSURING TRANSMISSION ROBUSTNESS

There are various ways to ensure transmission stability. At the level of standard software features and protocols, the SMPTE ST 2022-7 standard provides dual streaming of a generated IP stream. If packets of the first stream are lost, they can be reconstructed from the second stream. Another method is to transmit the stream in up to four different audio qualities.

In case of failure, the decoder switches to the next available quality. If unicast streams are sufficient for certain scenarios, SRT [Secure Reliable Transport] can also be used. SRT was originally designed for video but handles audio equally well. It offers much better protection against packet loss than conventional FEC schemes while keeping latency low. Moreover, SRT offers encryption of the content.
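
The idea behind dual streaming, where packets lost on one path are filled in from the other, can be sketched in a few lines of Python. This is a simplified illustration and not an implementation of the standard: packets are modeled as hypothetical `(sequence number, payload)` pairs, and sequence-number wraparound, timing and buffer limits that a real receiver must handle are left out.

```python
from typing import Dict, Iterable, List, Tuple

# Simplified stand-in for an RTP packet: (sequence number, payload).
Packet = Tuple[int, bytes]


def merge_redundant_streams(path_a: Iterable[Packet],
                            path_b: Iterable[Packet]) -> List[Packet]:
    """Combine two copies of the same stream into one, ordered by sequence number.

    A packet missing on one path is taken from the other; duplicates are dropped.
    """
    merged: Dict[int, bytes] = {}
    for seq, payload in path_a:
        merged[seq] = payload
    for seq, payload in path_b:
        merged.setdefault(seq, payload)  # only fills gaps left by path A
    return sorted(merged.items())


def missing_sequence_numbers(packets: Iterable[Packet],
                             first: int, last: int) -> List[int]:
    """Sequence numbers in [first, last] that did not arrive on either path."""
    received = {seq for seq, _ in packets}
    return [seq for seq in range(first, last + 1) if seq not in received]


# Path A lost packets 3 and 5, path B lost packet 2.
path_a = [(1, b"a"), (2, b"b"), (4, b"d")]
path_b = [(1, b"a"), (3, b"c"), (4, b"d"), (5, b"e")]

stream = merge_redundant_streams(path_a, path_b)
print([seq for seq, _ in stream])
print(missing_sequence_numbers(stream, 1, 5))
```

Audio is only interrupted if the same packet is lost on both paths, which is why the two copies are usually sent over network routes that are as independent as possible.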

At the device level, redundancy requires a clear concept that defines exactly how encoders and decoders should be cross-connected and when to switch to the backup device. In principle, this structure can be applied in a similar way to all parts of a system.
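
A switching rule of this kind, whether it steps down through audio qualities or moves to a backup device, can be expressed as a priority list. The following Python sketch is only one possible way to model it; the `Source` type, the source names and the health flag are hypothetical and would be supplied by real monitoring in practice.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Source:
    name: str
    healthy: bool  # assumption: set by some external monitoring function


def select_source(priority_chain: Sequence[Source]) -> Optional[Source]:
    """Return the first healthy source in priority order, or None if all failed."""
    for source in priority_chain:
        if source.healthy:
            return source
    return None


# Hypothetical chain: preferred quality first, backup device last.
chain = [
    Source("main encoder, PCM", healthy=False),
    Source("main encoder, AAC", healthy=True),
    Source("backup encoder, AAC", healthy=True),
]

active = select_source(chain)
print(active.name if active else "no source available")
```

Keeping the order explicit in one place makes it easy to document and test exactly when a decoder leaves the main device for the backup.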

In addition, hybrid distribution not only provides an alternative source as a backup but also increases coverage by reaching regions that still lack IP connectivity, or certain end-customer devices such as DAB radios. The possibility of using several sources in parallel means the produced content can be distributed via IP, FM, satellite or DAB.

In terms of bandwidth and stream management, software-based redundancy obviously does not come at low bandwidth, and video streams in particular are real bandwidth drivers. An SDN [software-defined network] controller chooses the optimal path through the network for the traffic. In addition, an orchestrator handles the high number of streams.
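
How a controller might pick a path can be illustrated with a shortest-path search that ignores links without enough free bandwidth. This Python sketch is a toy model under stated assumptions: the topology, node names, latency and bandwidth figures are invented, and a real SDN controller applies its own topology discovery and policies.

```python
import heapq
from typing import Dict, List, Optional, Tuple

# topology[node][neighbor] = (latency in ms, free bandwidth in Mbit/s)
Topology = Dict[str, Dict[str, Tuple[float, float]]]


def best_path(topology: Topology, src: str, dst: str,
              required_mbps: float) -> Optional[Tuple[float, List[str]]]:
    """Lowest-latency path from src to dst using only links with enough free bandwidth.

    Dijkstra's algorithm; returns (total latency, node list) or None if no path fits.
    """
    queue: List[Tuple[float, str, List[str]]] = [(0.0, src, [src])]
    visited = set()
    while queue:
        latency, node, path = heapq.heappop(queue)
        if node == dst:
            return latency, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, (link_latency, free_mbps) in topology.get(node, {}).items():
            if neighbor not in visited and free_mbps >= required_mbps:
                heapq.heappush(queue, (latency + link_latency, neighbor, path + [neighbor]))
    return None


# Hypothetical network: a fast but narrow route via sw1, a slower wide route via sw2.
network: Topology = {
    "studio": {"sw1": (1.0, 1000.0), "sw2": (2.0, 10000.0)},
    "sw1": {"tx": (1.0, 100.0)},
    "sw2": {"tx": (2.0, 10000.0)},
    "tx": {},
}

print(best_path(network, "studio", "tx", required_mbps=3))     # audio stream
print(best_path(network, "studio", "tx", required_mbps=1500))  # video stream
```

In this toy network the audio stream takes the short route via `sw1`, while the video stream is steered to the wider route via `sw2` because the first route lacks capacity.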

Finally, synchronization in IP networks, and particularly synchronization of audio to video, can be achieved with PTPv2. With parallel hybrid distribution via satellite, synchronization takes place via GPS using the 1PPS signal.
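
The core arithmetic behind PTP's delay request-response mechanism is compact enough to show in Python. The sketch below uses the four timestamps of one exchange and assumes a symmetric network path; the example values are invented, and a real PTPv2 stack adds filtering, correction fields and hardware timestamping that are not shown here.

```python
from typing import Tuple


def ptp_offset_and_delay(t1: int, t2: int, t3: int, t4: int) -> Tuple[float, float]:
    """Estimate clock offset and mean path delay from one PTP exchange.

    t1: master sends Sync           (master clock, ns)
    t2: slave receives Sync         (slave clock, ns)
    t3: slave sends Delay_Req       (slave clock, ns)
    t4: master receives Delay_Req   (master clock, ns)
    Assumes the path delay is the same in both directions.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2      # slave clock minus master clock
    mean_delay = ((t2 - t1) + (t4 - t3)) / 2  # one-way network delay
    return offset, mean_delay


# Invented example: the slave clock runs 500 ns ahead, one-way delay is 1000 ns.
offset_ns, delay_ns = ptp_offset_and_delay(t1=0, t2=1500, t3=2000, t4=2500)
print(f"offset: {offset_ns} ns, mean path delay: {delay_ns} ns")
```

The slave then corrects its clock by the estimated offset, which is what lets separately carried audio and video streams be aligned to a common time base.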