The author is Chief Technology Officer of 2wcom. This article originally appeared in the ebook “What’s Next for Virtualization?”

Virtualizing software, especially using containers, makes it much easier to run the software on standard server hardware instead of dedicated broadcast devices.

Building platform-independent software is a very good exercise. It definitely was one for us at 2wcom when we migrated our embedded software, which was originally designed for our four-channel audio-over-IP codec hardware (IP-4c).

But once that was achieved, it helped us to realize a project in which approximately 400 rack units of equipment could be reduced to just six rack units of servers, including redundancy!

As we are diving deeper into the virtual rabbit hole named Kubernetes, it becomes clearer that virtualization was just the beginning.

Why do broadcasters need Kubernetes?

Kubernetes — “K8s” for short — is an open-source platform to manage containers, services, and workloads across multiple physical machines. It is the state-of-the-art platform to manage containers and is used by Netflix, Google, Spotify and many more.

But why do we need this in our broadcast world? Because it helps to fulfil some of our daily requirements: reliability, scalability, updates and monitoring.

Reliability

Kubernetes is self-healing! This is a major advantage over traditional systems where just backups and redundancy are defined.

With K8s it is possible to move workloads off entire machines in disaster scenarios. If, for example, one of your servers crashes or reports a disk pressure condition, the other servers (also known as worker nodes) can take over the services of the failing machine.

Even though this process might not be seamless, it is self-healing because K8s tries to maintain the same number of services and containerized apps that you have defined.

Together with a sophisticated redundancy scheme, the broadcaster can achieve seamless switching and zero downtime even while replacing entire machines in the cluster.
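The self-healing behaviour described above can be pictured as a loop that compares the number of running instances with the number you have defined and starts replacements on healthy machines. The following is a minimal Python sketch of that idea, not Kubernetes code: the `Node` class, the `reconcile` function and the node and app names are hypothetical, and the real platform takes many more factors into account.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A simplified worker node (hypothetical model, not a Kubernetes object)."""
    name: str
    healthy: bool = True
    instances: list = field(default_factory=list)


def reconcile(nodes, app, desired):
    """Bring the number of running `app` instances back to `desired`."""
    # Instances on failed nodes are considered lost.
    for node in nodes:
        if not node.healthy:
            node.instances.clear()

    healthy = [n for n in nodes if n.healthy]
    running = sum(n.instances.count(app) for n in healthy)

    # Start each missing instance on the least-loaded healthy node.
    for _ in range(desired - running):
        if not healthy:
            break
        target = min(healthy, key=lambda n: len(n.instances))
        target.instances.append(app)

    return {n.name: len(n.instances) for n in nodes}


nodes = [Node("worker-1"), Node("worker-2"), Node("worker-3")]
print(reconcile(nodes, "codec", desired=6))  # two instances per node

nodes[0].healthy = False                     # worker-1 fails
print(reconcile(nodes, "codec", desired=6))  # survivors take over
```

After the simulated failure, the six instances are spread over the two remaining nodes. The brief gap between the failure and the restart is why the process is self-healing but not necessarily seamless.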

Scalability

Let’s say the CPU load requirements of one of your apps increase because you want to transcode an additional audio/video stream for monitoring purposes.

Without Kubernetes, the operator will likely have to install a new server and move some of the app instances from each running computer to this new server. This frees up resources on all machines, enabling the additional monitoring stream. Managing that process can be a lot of work and requires extensive planning.

With Kubernetes this is as simple as installing a new server and letting it join the cluster with just one simple command:

kubeadm join [api-server-endpoint]

After that, the operator just needs to push the new configuration and its resource requirements (called requests and limits in Kubernetes) into the cluster.
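To illustrate why a newly joined server immediately helps, here is a simplified Python sketch of placing an app by its CPU request. It is an assumption-laden model, not the Kubernetes scheduler: the `schedule` function, the node names and the millicore figures are hypothetical, and only CPU requests are considered.

```python
def schedule(pod_request_m, nodes):
    """Place a pod on the node with the most unreserved CPU.

    `nodes` maps a node name to its CPU capacity and the CPU already
    requested by other pods, both in millicores. Returns the chosen
    node name, or None if no node has enough room.
    """
    free = {
        name: n["capacity_m"] - n["requested_m"]
        for name, n in nodes.items()
    }
    candidates = {name: f for name, f in free.items() if f >= pod_request_m}
    if not candidates:
        return None

    chosen = max(candidates, key=candidates.get)
    nodes[chosen]["requested_m"] += pod_request_m  # reserve the CPU
    return chosen


cluster = {
    "worker-1": {"capacity_m": 8000, "requested_m": 7500},
    "worker-2": {"capacity_m": 8000, "requested_m": 7000},
}
print(schedule(2000, cluster))  # no room for the monitoring transcoder

# A new server joins the cluster.
cluster["worker-3"] = {"capacity_m": 8000, "requested_m": 0}
print(schedule(2000, cluster))  # the transcoder lands on the new node
```

In Kubernetes, the request is the amount reserved when a container is placed, while the limit caps what it may consume at runtime. This sketch models only the request.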

Updates

Everybody working in IT knows that updates can be time-consuming and a source of trouble. Kubernetes really helps with deploying software updates because it lets you define strategies for doing so.

One strategy could be to update 25% of your containerized apps at the same time and roll that update through the cluster. This gives the user time to react and roll back the update in problematic situations.

In addition, a rolling update maintains seamless redundancy with no manual switching required. The admin who deploys the update can define how many app instances are updated at the same time (in Kubernetes, through the maxSurge and maxUnavailable settings of a rolling update).
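As a rough illustration of what a 25% setting means in practice, the Python sketch below converts percentage values into absolute instance counts. It assumes the rounding Kubernetes documents for Deployments (maxSurge rounds up, maxUnavailable rounds down). The helper names `resolve` and `rollout_bounds` are hypothetical.

```python
import math


def resolve(value, replicas, round_up):
    """Turn a value such as '25%' or 2 into an absolute instance count."""
    if isinstance(value, str) and value.endswith("%"):
        exact = replicas * int(value[:-1]) / 100
        return math.ceil(exact) if round_up else math.floor(exact)
    return int(value)


def rollout_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return the instance-count limits that hold during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return {
        "max_total": replicas + surge,             # old + new instances
        "min_available": replicas - unavailable,   # instances kept serving
    }


print(rollout_bounds(8))   # {'max_total': 10, 'min_available': 6}
print(rollout_bounds(10))  # {'max_total': 13, 'min_available': 8}
```

With eight instances, at least six keep running at every step of the update, which is what allows the redundancy scheme to stay intact while the new version rolls through the cluster.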

Another update strategy could be to push a new version into your cluster and let the individuals who are controlling and using your software decide when to apply the new version. In our case this was a very nice feature.

An administrator can push the new software version into the cluster as soon as it is approved. The operator, who only configures their own audio streams, can simply restart an instance whenever it is suitable. The restart automatically applies the updated version while keeping the same configuration.

Monitoring

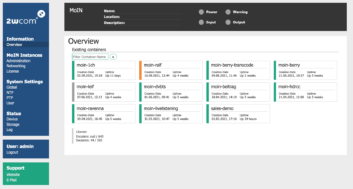

Operating a huge cluster instead of hundreds of individual hardware boxes can be daunting, because everything relies on a couple of machines instead of hundreds. But contrary to what one might think, Kubernetes does not make troubleshooting more complex. It can seriously speed up root-cause analysis and bug fixing.

A great advantage is that standardized mechanisms can be used to obtain logs from different parts of the software. These logs can even be fed into an indexing search engine (for example Elasticsearch), which lets you search and correlate the log files many times faster. This makes it easier to find common failures across multiple instances.

Let’s say you need to find a reason why SNMP connections break down. In that case you could search through all log files of all software parts for an entry of “snmp”. The result will quickly show you the number of found entries and you can explore the relationship and chronological sequence of the errors.
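The kind of query described above can be sketched in plain Python. This is a stand-in for the idea only and does not use the Elasticsearch API. The `search_logs` function, the component names and the log entries are hypothetical sample data.

```python
from collections import Counter
from datetime import datetime


def search_logs(entries, term):
    """Return matching entries in chronological order and a count per component."""
    hits = [e for e in entries if term.lower() in e["message"].lower()]
    hits.sort(key=lambda e: datetime.fromisoformat(e["time"]))
    counts = Counter(e["component"] for e in hits)
    return hits, counts


# Hypothetical log entries collected from different parts of the software.
logs = [
    {"time": "2024-05-01T10:00:07", "component": "web-ui",
     "message": "SNMP poll timed out"},
    {"time": "2024-05-01T10:00:02", "component": "snmp-agent",
     "message": "snmp session closed by peer"},
    {"time": "2024-05-01T10:00:05", "component": "codec",
     "message": "stream 3 started"},
    {"time": "2024-05-01T10:00:09", "component": "snmp-agent",
     "message": "SNMP session re-established"},
]

hits, counts = search_logs(logs, "snmp")
print(len(hits), dict(counts))
for entry in hits:
    print(entry["time"], entry["component"], entry["message"])
```

Sorting the matches by timestamp shows which component reported the problem first, which is often the quickest hint toward the root cause.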

Setting up such systems is time-consuming, but with a Kubernetes installation the vendor can also provide the monitoring stack, as we do at 2wcom. We provide Elasticsearch, Kibana and Grafana as a sophisticated monitoring stack that integrates well with our software.

Conclusion

Although the shift towards virtualization can be scary because the environment is so different from physical devices, it brings valuable improvements and streamlined processes for operating a high-quality broadcast system.

The streamlined processes provided by Kubernetes reduce the maintenance overhead of a broadcast system, which leads to lower operational expenses or frees up resources to do what really matters: delivering high-quality broadcast content.