This is part of a continuing series of occasional articles about basic broadcast concepts for new LPFM broadcasters and others who may be unfamiliar with industry terminology and practices.

It’s not unusual for songs from different sources to play at different volume levels. Even material produced within the same station can vary widely when people aren’t watching levels closely while recording.

I was asked the following question: “Seems like the volume levels of some songs are different. It’s not drastic but it’s noticeable to me. I mainly notice it on the headphones. Am I imagining this? If not, is there a simple solution?”

That question isn’t about the loud parts versus quiet parts within one song, but rather about differences from song to song. Differences between quiet and loud passages within a song are perfectly acceptable; that is referred to as the dynamics of the song. Subtle differences in level between one source and the next, on the other hand, are often corrected by our audio processing.

However, when your headphones are monitoring program (as opposed to “air,” an off-air receiver or a modulation monitor), you are hearing the sound before it reaches the processing, so those song-to-song differences will be audible.

In this case, we’re talking about audio files where the peaks may be in the low 80 percent range or even lower, versus songs where the peaks are hitting 100 percent. Remember that digital audio is quite unforgiving: there is nothing above 100 percent (full scale), so audio that tries to exceed it is clipped and very noticeable distortion results. That is the reason our board ops should be watching those levels!

When “ripping” or transferring or converting audio (same thing, different names) from CDs to automation-friendly WAV files, many “ripping” utilities allow for automatic “trimming” of both the beginning and end of the file. This removes the embedded silence or dead air that a track might contain at its start and end, and provides a much “tighter cue,” as in the days of “back-cueing” a vinyl record so it started instantly when needed.
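As a rough illustration of what such a trim does, here is a minimal Python sketch. It assumes the audio has already been loaded as a list of sample values between -1.0 and 1.0 (where 1.0 is 100 percent); the function name `trim_silence` and the `threshold` value are hypothetical and not taken from any particular ripping utility.

```python
def trim_silence(samples, threshold=0.01):
    """Return samples with leading and trailing near-silence removed.

    samples:   list of floats from -1.0 to 1.0 (1.0 = 100 percent).
    threshold: assumed cutoff; anything quieter counts as silence.
    """
    # Walk forward past the dead air at the start of the track.
    start = 0
    while start < len(samples) and abs(samples[start]) < threshold:
        start += 1

    # Walk backward past the dead air at the end of the track.
    end = len(samples)
    while end > start and abs(samples[end - 1]) < threshold:
        end -= 1

    return samples[start:end]


track = [0.0, 0.0, 0.002, 0.4, -0.6, 0.0, 0.3, 0.001, 0.0]
print(trim_silence(track))  # [0.4, -0.6, 0.0, 0.3]
```

Note that only the ends are trimmed. A silent moment in the middle of the song is part of the performance and is left alone.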

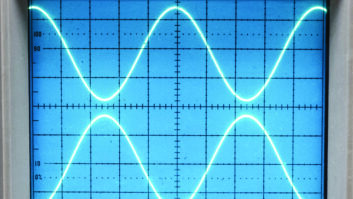

Fig. 1: Audio on the low side (peaks appear to be in the 90 percent range)

Fig. 2: Normalizing to 98 percent

Fig. 3: Final product

The other common option in ripping software is to “normalize” the audio. To normalize is not the same as to compress or expand, nor is it to apply an AGC (automatic gain control). To “normalize” is to give the file a reference peak that is standard across all your audio files. I tend to rip files to a peak of 98 percent (as opposed to 100 percent). When you “normalize” your audio, you are saying that no matter what the highest point in the track is, whether 85 percent or 100 percent, you want that peak to be 98 percent. The adjustment is made one single time, across the entire file, so that “loud” is 98 percent.

In Fig. 1, there is a tune that looks to have peaks around 90 percent. In Fig. 2, I have chosen to normalize the file at 98 percent. Fig. 3 shows the resulting waveform.
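For readers who like to see the idea spelled out, peak normalization can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any specific ripping program works: samples are assumed to be floats between -1.0 and 1.0, and the names `peak` and `normalize` are made up for this example.

```python
def peak(samples):
    """Highest absolute sample value (1.0 = 100 percent)."""
    return max(abs(s) for s in samples)


def normalize(samples, target=0.98):
    """Scale the whole file by one gain so its peak lands on target."""
    current = peak(samples)
    if current == 0:
        # A completely silent file has nothing to scale.
        return list(samples)
    gain = target / current  # one number, applied to every sample
    return [s * gain for s in samples]


quiet_song = [0.10, -0.45, 0.90, -0.30]  # peaks at 90 percent
loud_song = [0.20, -1.00, 0.50, -0.75]   # peaks at 100 percent

for song in (quiet_song, loud_song):
    print(round(peak(normalize(song)), 2))  # 0.98 for both songs
```

Because the gain is a single value for the whole file, a file peaking at 100 percent is turned down slightly and a file peaking at 90 percent is turned up, and both end up with the same 98 percent peak.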

It should be noted that when a file is normalized, everything else is adjusted up or down by the same gain. So if the peak had been 88 percent and was brought up to 98 percent, every sample in the file was multiplied by the same factor (about 1.11, or a little under 1 dB), which means the lowest audio and the middle audio were raised in the same proportion as the peak. The differences that existed in dynamics are still maintained. Applying the change equally across the whole song doesn’t alter the dynamics; it just makes sure that the loudness of the loudest part is consistent between all your audio files.
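To put numbers on the 88 percent example, treat normalization as one gain factor $g$ applied to every sample. The 44 percent passage below is a made-up value chosen only to illustrate the point.

$$
g = \frac{0.98}{0.88} \approx 1.114, \qquad 20\log_{10} g \approx 0.93\ \text{dB}
$$

$$
\text{peak: } 0.88 \times g = 0.98, \qquad \text{quieter passage: } 0.44 \times g = 0.49
$$

The peak was twice the level of the quieter passage before normalizing ($0.88 / 0.44 = 2$) and is still twice its level afterward ($0.98 / 0.49 = 2$), which is what it means for the dynamics to be preserved.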

We as audio engineers really want to maintain the integrity of the artist’s work (including the producer and composer), so we generally minimize any changes to the audio content on a piece-by-piece basis. If we have old source material that needs to be cleaned up, we can do that (say, from an old tape recording with hiss or an LP with pops), but generally we don’t change the dynamics on a song-by-song basis.

This is where we allow our audio processors to give the station that overall processed sound we’re aiming for — which includes bringing up quieter passages when necessary. Of course radio processing, if done to extremes, can certainly change the “sound” of the song or piece from how the composer or artist intended.

Generally, classical music stations tend to preserve the dynamics much more than pop music stations. A great example of why that might be is the “1812 Overture.” When the cannons “boom,” it’s at a significantly louder level than the orchestral build-up of the piece. With changes to the dynamics through processing, we could easily bring the level of the prelude up to the same level as the cannons, but it would destroy the psychological effect of the “boom” after listening to the rest of the work left at that lower level.

So when listening to your station’s audio files, keep in mind that peak levels should be consistent from file to file. Let the dynamics of each source be addressed by the processing that follows the output of the mixing console.