The author is sales and marketing manager at 2wcom.

Requirements for covering live events are rising. Compatibility, audio quality, flexibility, simplicity and transmission robustness are the current buzzwords. Broadcasters need solutions that support studio-to-studio links, studio-to-transmitter links and cross-media tasks. The latter also means that production planning for content distribution and storage must cover both radio and television.

GENERAL REQUIREMENTS

For mixed networks with expanded facilities that include a vocal booth, OB van, live event studio, main station studio and regional studios, high levels of interface compatibility (even through third-party apps) and support for a range of distribution technologies are mandatory.

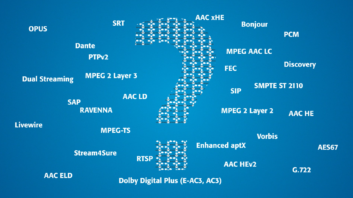

It is thus essential that all major internet interoperability protocols are supported. These include Livewire+ for studio environments, Dante for smaller broadcast networks (studios, concert halls or theaters), Ravenna for distribution in large audio networks and SRT to provide content for both radio and television.

Standards like EBU N/ACIP Tech 3326, AES67, SMPTE ST 2110 and NMOS harmonize data exchange between the protocols. Because the main challenge is operating mixed networks, protocol transformation, for example from Livewire to Ravenna, eases an operator’s task.

Protocols such as HTTPS, TCP/IP (Icecast for internet radio streams), ICMP, DHCP, Discovery, Bonjour, IGMPv2, IGMPv3, SFTP and UDP let users control data transmission and communication between single points. They also ensure content accessibility via the station’s website or media archive.

WHICH AUDIO CODEC?

For real-time applications, such as audio description or mobile target groups (truck or taxi drivers), high audio quality and low latency are extremely important. This is especially true for popular events like the FIFA World Cup. For this purpose, codec algorithms such as PCM, E-aptX or Opus should be supported.

When uploading files to websites or media archives, transcoding from the above-mentioned high-quality codecs to more economical ones should also be possible (e.g., all AAC profiles including xHE-AAC, as well as Ogg Vorbis and all common MPEG layers).
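To put "more economical" into numbers, the following minimal sketch compares the raw bit rate of linear PCM with a compressed archive copy. The 48 kHz, 24-bit stereo source and the 128 kbit/s target are assumptions chosen for illustration, not figures from a particular product.

```python
def pcm_bitrate_bps(sample_rate_hz: int, bit_depth: int, channels: int) -> int:
    """Raw bit rate of uncompressed linear PCM, in bits per second."""
    return sample_rate_hz * bit_depth * channels


def megabytes_per_hour(bitrate_bps: int) -> float:
    """Storage needed for one hour of audio (1 MB = 10**6 bytes)."""
    return bitrate_bps * 3600 / 8 / 1_000_000


# Assumed contribution format: 48 kHz, 24 bit, stereo.
pcm_bps = pcm_bitrate_bps(48_000, 24, 2)

# Assumed bit rate for the transcoded archive copy.
archive_bps = 128_000

print(f"PCM:     {pcm_bps} bit/s, {megabytes_per_hour(pcm_bps):.1f} MB per hour")
print(f"Archive: {archive_bps} bit/s, {megabytes_per_hour(archive_bps):.1f} MB per hour")
```

Under these assumptions, an hour of PCM occupies roughly 1 GB, while the transcoded copy needs well under 100 MB.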

With cross-media applications in mind, transcribing the audio in combination with an image generated from the produced video ensures the smart display of broadcast content on a station’s website. Both transcoding and transcribing enable radio stations to make broadcast content available to the audience afterward and to store it for future reuse.

For events, combining individual audio streams into multichannel streams makes it possible to manage recorded audio content and background sound flexibly. This can include audio from the stage, sideline or grandstand, or even speaker commentary. Local studios need to exchange regional content and ancillary data and to broadcast them in parallel with the main stream. To ensure precisely synchronized routing of different streams between linked networks and good latency management, Precision Time Protocol (PTPv2) or 1pps should be supported.
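Combining several mono sources into one multichannel stream can be pictured as sample interleaving. The sketch below is a simplified illustration: the function names are hypothetical, and it assumes the sources are already time-aligned (for example via PTPv2) and carry 16-bit PCM samples.

```python
import struct


def interleave(channels: list[list[int]]) -> list[int]:
    """Interleave equal-length mono sample lists into one multichannel stream.

    The output order is frame by frame: ch0[0], ch1[0], ..., ch0[1], ch1[1], ...
    """
    if not channels:
        return []
    length = len(channels[0])
    if any(len(ch) != length for ch in channels):
        raise ValueError("all channels must have the same number of samples")
    return [sample for frame in zip(*channels) for sample in frame]


def to_pcm16_bytes(samples: list[int]) -> bytes:
    """Pack samples as little-endian signed 16-bit PCM."""
    return struct.pack(f"<{len(samples)}h", *samples)


# Hypothetical, already synchronized sources at an event.
stage = [1, 2, 3]
sideline = [10, 20, 30]
commentary = [100, 200, 300]

multichannel = interleave([stage, sideline, commentary])
print(multichannel)  # [1, 10, 100, 2, 20, 200, 3, 30, 300]
payload = to_pcm16_bytes(multichannel)
```

Keeping the channels in a single stream means they travel, and arrive, together, which is what makes the later mix at the studio manageable.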

TEAM SUPPORT

To guarantee optimal broadcast coverage of an event, it’s also important to assist the teams on-site and to keep daily operation flexible. If more temporary channels are needed, scalable channel activation is necessary. Preconfiguration of hardware and software for operation, management and control should be possible via an easy-to-use web interface and remotely via SNMP, Ember+ or JSON. A SIP phonebook simplifies the process by providing an uncomplicated connection between the individual studios and automatic negotiation of codecs and protocols.

SIP entries and status information that are accessible both via a web interface and on the hardware itself give reporters in the field flexibility if WLAN is not available. To increase the independence of production teams, it’s important to support DHCP. This permits the automatic allocation of IP addresses, so teams do not need to request an IP address from the Help Desk.

ENSURING DISTRIBUTION

A multilayer concept ensures transmission robustness. Hardware devices should be equipped with at least two power supplies (optionally hot-swappable). Pro-MPEG FEC or Reliable User Datagram Protocol (RUDP) offers protection against IP packet loss; RUDP is more effective and more economical in terms of bandwidth (Note: RUDP needs a duplex IP link and unicast/multiple unicast).
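The principle behind forward error correction can be shown with a single XOR parity packet. This is a deliberately simplified sketch: Pro-MPEG FEC arranges packets in a matrix with row and column parity, whereas the hypothetical helpers below protect one group of equal-length packets with one parity packet and can therefore repair only one loss per group.

```python
from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: list[bytes]) -> bytes:
    """Sender side: XOR all media packets of a group into one parity packet."""
    return reduce(xor_bytes, packets)


def recover(received: list[Optional[bytes]], parity: bytes) -> list[bytes]:
    """Receiver side: rebuild at most one lost packet (marked as None)."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("one parity packet can repair only one lost packet")
    if not missing:
        return list(received)
    present = [p for p in received if p is not None]
    # XOR of the parity with every surviving packet yields the lost one.
    repaired = list(received)
    repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


group = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
parity = make_parity(group)

# Simulate the loss of the third packet on the network.
damaged = [group[0], group[1], None, group[3]]
assert recover(damaged, parity) == group
```

The parity packet is sent in addition to the media packets, so FEC costs bandwidth even when nothing is lost. A retransmission scheme such as RUDP resends only what is missing, which is why it needs a return path.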

Further redundancy can be achieved by transmitting two streams, or two different audio qualities, in parallel. If the main stream is interrupted, the respective receivers switch seamlessly to the second one.
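A receiver-side view of this redundancy might look like the following sketch. It assumes, for illustration only, that both streams number their packets identically so the receiver can fill gaps in the main stream from the backup; the function name and the dictionary representation are hypothetical.

```python
from typing import Optional


def merge_streams(
    main: dict[int, bytes],
    backup: dict[int, bytes],
    first_seq: int,
    last_seq: int,
) -> list[tuple[int, Optional[bytes], str]]:
    """For each sequence number, prefer the main stream and fall back to backup.

    Returns (sequence number, payload or None, source) for every expected packet.
    """
    output = []
    for seq in range(first_seq, last_seq + 1):
        if seq in main:
            output.append((seq, main[seq], "main"))
        elif seq in backup:
            output.append((seq, backup[seq], "backup"))
        else:
            output.append((seq, None, "lost"))
    return output


# The main stream drops packets 3 and 4; the backup stream is complete.
main_rx = {1: b"m1", 2: b"m2", 5: b"m5"}
backup_rx = {n: f"b{n}".encode() for n in range(1, 6)}

for seq, payload, source in merge_streams(main_rx, backup_rx, 1, 5):
    print(seq, source, payload)
```

In this example packets 3 and 4 come from the backup and playback returns to the main stream at packet 5. If the backup carries a lower audio quality, the listener hears a brief quality change rather than silence.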

Finally, it’s impossible to ignore the advantages of IP-based networks, but for the sake of harmonization between old and new transmission technologies, hybrid solutions are mandatory.