IP Audio Begins Interoperability Journey

Dec 1, 2013 7:56 AM, By Doug Irwin, CPBE DRB AMD

The Audio Engineering Society has developed a new standard regarding the interoperability of audio over IP (AoIP) systems now known as AES67. The new standard is the result of the work of the AES-X192 task group, which finished its work in April 2013. After being ratified by the AES Standards due process, it was published on Sept. 11, 2013.

You’ll hear more about this standard as time goes by. But what is AES67, and how can it benefit a radio station? To start, let’s use the AES67-2013 standard itself for the definition of its scope:

“This standard defines an interoperability mode for transport of high-performance audio over networks based on the Internet Protocol. For the purposes of the standard, high-performance audio refers to audio with full bandwidth and low noise. These requirements imply linear PCM coding with a sampling frequency of 44.1kHz and higher and resolution of 16 bits and higher. High performance also implies a low-latency capability compatible with live sound applications. The standard considers latency performance of 10 milliseconds or less.”

The AES67-2013 standard also points out that the current AoIP systems are not interoperable, despite the fact that they all have a common basis in IP. AES67 is simply a means to give them a way to talk to one another, using existing protocols; no new protocols have been developed in this process. The AES expects this standard to be useful in fixed and touring live-sound applications, as well as music production and post-production, and, of course, broadcasting.

Synchronization

Many of us have used AoIP systems in which timing was irrelevant; streaming is the most obvious one. When using UDP/IP (the best-effort transport) it doesn’t matter to us when audio is heard on the far end. Using audio over IP for remote broadcasts is another example; usually we would just accept and work with whatever the delay was between points A and B. It’s out of our control, generally speaking, and not a problem unless it gets exceptionally long anyway.

However, if you work in live sound, it’s not hard to imagine how important timing would be. One could not allow timing to change for front-of-house or stage applications. Using AoIP in that application would demand fixed time delays. This seems to me, after reading AES67, to be one of the most important aspects of the new standard. From the AES67-2013 standard:

“The ability for network participants to share an accurate common clock distinguishes high-performance media streaming from its lower-performance brethren such as Internet radio and IP telephony. Using a common clock, receivers anywhere on the network can synchronize their playback with one another. A common clock allows for a fixed and determinable latency between sender and receiver. A common clock assures that all streams are sampled and presented at exactly the same rate. Streams running at the same rate may be readily combined in receivers. This property is critical for efficient implementation of networked audio devices such as digital mixing consoles.”

Let me give you a hypothetical application in broadcasting though. Let’s say you use AoIP for your main STL for an FM station; and let’s also say you want to build an on-channel booster for that same FM station. In this application you’ll want to maintain a fixed (but configurable) delay between the two; but you’re also using IP for your connection to the booster site. How would you ensure the time delay remains constant? AES67 might provide the answer: It uses IEEE 1588-2008, otherwise known as Precision Time Protocol. PTP basically works like this:

• A grand-master clock lives on the network, and it receives its time data via GPS.

• Time-stamped synchronization messages are sent out on the network and received at each end point (slave), where the time is read.

• The slave can (optionally) send a delay-request message back to the grand master.

• The grand master in turn answers the slave that requested it with a delay-response message carrying an updated time stamp.

• The slave receives the message, takes the time difference between when it sent its request and when it received the reply, and divides it in two. The calculated propagation delay is then added to the clock at the end point.

So what’s particularly neat about this is that not only do you synchronize the clocks at the end points, but you also continually measure the propagation delay, and corrections are made as necessary.
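The exchange described above reduces to a little arithmetic on four time stamps. The sketch below uses the usual IEEE 1588 delay request-response bookkeeping and assumes the path delay is the same in both directions. The function name and the t1 through t4 labels are illustrative and do not come from AES67 itself.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate slave clock offset and one-way path delay.

    t1: grand master's time stamp when it sent the sync message
    t2: slave's local time when the sync message arrived
    t3: slave's local time when it sent the delay request
    t4: grand master's time when the delay request arrived
    All four values must be in the same unit (for example nanoseconds).
    Assumes a symmetric path: same delay in each direction.
    """
    master_to_slave = t2 - t1   # path delay plus slave offset
    slave_to_master = t4 - t3   # path delay minus slave offset
    delay = (master_to_slave + slave_to_master) / 2
    offset = (master_to_slave - slave_to_master) / 2
    return offset, delay


# Hypothetical numbers: the slave clock runs 5 units ahead of the
# grand master and the network adds 2 units of delay each way.
offset, delay = ptp_offset_and_delay(t1=1000, t2=1007, t3=1010, t4=1007)
corrected_slave_time = 1010 - offset  # what the slave clock should have read
```

With these made-up values the slave learns that it is 5 units fast and that the path delay is 2 units, so it can both correct its clock and account for the network's propagation time.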

Media Clock

Closely related to the network time, which is continually adjusted by way of PTP, is the function of the media clock. On the input side of the AoIP system, the media clock sets the sampling rate for the ADC at one of the standard’s supported frequencies: 44.1, 48 or 96kHz. Likewise, on the output side, the media clock is used by the DAC. The media clock has a fixed relationship to the network (grand-master) clock; for example, the media clock used for an ADC at a 48kHz sampling rate will advance 48,000 samples for each elapsed second on the network clock.
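The fixed relationship between the two clocks can be sketched in a few lines. This is only an illustration: the function names are hypothetical, network time is assumed to be available as an integer count of nanoseconds, and the wrap at 32 bits reflects the size of the RTP timestamp field rather than anything stated in this article.

```python
NANOSECONDS_PER_SECOND = 1_000_000_000


def media_clock_samples(network_time_ns, sample_rate_hz):
    """Number of whole samples the media clock has advanced.

    Integer arithmetic avoids floating-point rounding over long run times.
    """
    return network_time_ns * sample_rate_hz // NANOSECONDS_PER_SECOND


def rtp_timestamp(network_time_ns, sample_rate_hz, offset=0):
    """Media clock value plus a per-stream offset, wrapped to 32 bits."""
    samples = media_clock_samples(network_time_ns, sample_rate_hz)
    return (samples + offset) % 2**32


one_second = media_clock_samples(1_000_000_000, 48_000)  # 48000 samples
half_second = media_clock_samples(500_000_000, 44_100)   # 22050 samples
```

Because every device derives its sample count from the same network time, two devices running at 48kHz agree on which sample belongs to which instant.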

Transport

In the AES67 standard, media packets are transported using IP version 4, though care has been taken in its design so that the standard can facilitate IPv6 at a later date.

All participating devices must support IGMPv2 and may optionally support IGMPv3. IGMP (Internet Group Management Protocol) will be used by devices to request multicasts, such as the PTP messages, media streams, and discovery (more on that a little later).

Propagation time through a network must necessarily be low for media packets that comply with AES67. For this reason, quality of service (QoS) becomes a necessary feature in the network. All devices will use the DiffServ method, meaning that the DSCP (Differentiated Services Code Point) field in the header of each media packet will be marked according to its traffic class, allowing the network to recognize the need of said packets for preferential treatment. All devices will tag outgoing packets with their appropriate DSCP values.
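As a rough illustration of DSCP tagging, the sketch below marks a UDP socket so that every packet it sends carries a chosen code point. The specific class assignments (EF for clock traffic, AF41 for media) are an assumption here and should be checked against the standard and your switch configuration; the function names are hypothetical. The DSCP value occupies the upper six bits of the old IPv4 type-of-service byte, which is why it is shifted left by two.

```python
import socket

# Assumed class assignments; verify against AES67 and your network design.
DSCP_CLOCK = 46  # EF (expedited forwarding), assumed for PTP messages
DSCP_MEDIA = 34  # AF41 (assured forwarding), assumed for audio packets


def dscp_to_tos(dscp):
    """Place a 6-bit DSCP value in the upper bits of the IPv4 TOS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in six bits (0-63)")
    return dscp << 2


def open_marked_udp_socket(dscp):
    """Create a UDP socket whose outgoing packets carry the given DSCP.

    Some operating systems ignore or restrict this option.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock
```

Marking alone does nothing unless the switches and routers along the path are configured to honor the DSCP values.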

Encoding and Streaming

Two audio payload formats are supported in AES67: L16 (16-bit linear) and L24 (24-bit linear). All devices must support a 48kHz sample rate, and should support 44.1 and 96kHz sample rates.
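To get a feel for what these formats mean on the wire, the sketch below works out the audio payload size of one packet and packs a single L24 sample. The 1 millisecond packet time and the function names are assumptions made for illustration. The L16 and L24 RTP payload formats carry samples as two's complement values in network (big-endian) byte order.

```python
BYTES_PER_SAMPLE = {"L16": 2, "L24": 3}


def samples_per_packet(sample_rate_hz, packet_time_us):
    """Samples per channel carried in one packet of the given duration."""
    return sample_rate_hz * packet_time_us // 1_000_000


def payload_bytes(fmt, sample_rate_hz, channels, packet_time_us=1000):
    """Audio payload size of one packet, excluding RTP/UDP/IP headers."""
    frames = samples_per_packet(sample_rate_hz, packet_time_us)
    return frames * channels * BYTES_PER_SAMPLE[fmt]


def pack_l24(sample):
    """Encode one signed 24-bit sample as three big-endian bytes."""
    if not -2**23 <= sample < 2**23:
        raise ValueError("sample does not fit in 24 bits")
    return (sample & 0xFFFFFF).to_bytes(3, "big")


stereo_l24 = payload_bytes("L24", 48_000, channels=2)  # 48 * 2 * 3 = 288
```

A stereo L24 stream at 48kHz with the assumed 1 millisecond packet time carries 288 bytes of audio in each packet, sent 1,000 times per second.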

Multicasting of streaming data is an efficient means by which audio information can be distributed on a one-to-many basis. Multicasting is also an important component in connection management (described later). All receivers must be able to receive multicast and unicast streams. In AES67 only one device will send to a multicast destination. The destination address for a particular stream is configured in the management interface of the sending device.

Discovery

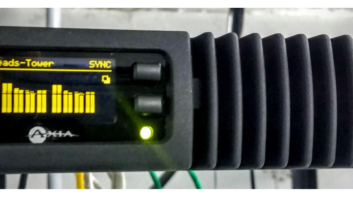

Discovery is used by participants on the network to build a list of other participants or sessions available on the network. The list is then available to end-users to facilitate connection management. Connection management requires a Session Initiation Protocol URI (uniform resource identifier) or a Session Description Protocol description. Although no discovery service is mandated by this standard, these can be delivered via one of a number of means, including: Bonjour (for Apple); SAP version 2; Axia Discovery Protocol; or Wheatstone’s Wheatnet IP discovery protocol.

Session Description

Session descriptions are used by discovery and in connection management (described a little later in this article). Session descriptions specify critical information about each stream, including network addressing, encoding format and origination information. Interoperability imposes additional SDP requirements and recommendations for the following aspects:

• Packet time

• Clock source

• RTP clock and media clock offset

• Payload type
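The sketch below shows how those four items might appear in a session description and how a receiver could read them. The SDP text is a made-up example with hypothetical addresses, session name and clock identity; the `ptime` and `rtpmap` attributes are standard SDP, and `ts-refclk` and `mediaclk` are assumed here to be the attributes that carry the clock source and media clock offset. The parser is deliberately minimal and not a complete SDP implementation.

```python
EXAMPLE_SDP = """\
v=0
o=- 1311738121 1311738121 IN IP4 192.168.1.1
s=Stage left I/O
c=IN IP4 239.0.0.1/32
t=0 0
m=audio 5004 RTP/AVP 96
a=rtpmap:96 L24/48000/8
a=recvonly
a=ptime:1
a=ts-refclk:ptp=IEEE1588-2008:39-A7-94-FF-FE-07-CB-D0:0
a=mediaclk:direct=963214424
"""


def parse_attributes(sdp):
    """Collect 'a=name:value' lines into a dict (last occurrence wins)."""
    attrs = {}
    for line in sdp.splitlines():
        if line.startswith("a="):
            name, _, value = line[2:].partition(":")
            attrs[name] = value
    return attrs


def describe_stream(sdp):
    """Pull out the items listed above from a single-stream description."""
    attrs = parse_attributes(sdp)
    payload_type, encoding = attrs["rtpmap"].split(" ", 1)
    fmt, rate, *rest = encoding.split("/")
    return {
        "payload_type": int(payload_type),
        "format": fmt,
        "sample_rate_hz": int(rate),
        "channels": int(rest[0]) if rest else 1,
        "packet_time_ms": float(attrs["ptime"]),
        "clock_source": attrs["ts-refclk"],
        "media_clock_offset": int(attrs["mediaclk"].split("=", 1)[1]),
    }


stream = describe_stream(EXAMPLE_SDP)
```

In this example the receiver learns that payload type 96 means eight channels of L24 audio at 48kHz in 1 millisecond packets, timed against a PTP grand master.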

Connection Management

Connection management is the means by which media streams are established between a sender and one or more receivers.

Connection management for all unicast streams shall be done via Session Initiation Protocol (SIP). All receivers must support unicast streams, and all receivers must support SIP. All devices are SIP user agents with an associated SIP URI (Uniform Resource Identifier). URIs are learned through discovery or by other means such as static configuration.

Typically, SIP is used with the assistance and participation of SIP servers, and different types of servers perform different tasks on a SIP network. They can be located anywhere on the network that is reachable by other participants. However, there is also a server-less mode that can be used to perform connection management between user agents in a direct peer-to-peer fashion. This mode is used in smaller systems where SIP servers would provide minimal benefit. In order for this to work, there must be a means by which the caller can learn the network information (i.e., IP address) of the callee. This can be done through discovery, manual configuration, or higher-layer protocols. In the server-less mode all SIP messages must be sent directly to the targeted agent as opposed to a SIP server, and an agent operating in this mode must respond to such requests.

Multicast connections may be established without the use of a connection management protocol. A receiver is not required to make a direct connection with the sender. Instead, a receiver obtains a session description of the desired connection using discovery, and then uses IGMP to inform the network of its desire to receive the stream in question.
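On a general-purpose computer, that last step can look like the sketch below. The group address and port would come from the session description; the function names and the example values in the usage comment are hypothetical. Setting the membership option on the socket is what prompts the operating system to send the IGMP membership report on the receiver's behalf.

```python
import socket
import struct


def build_membership_request(group, interface="0.0.0.0"):
    """Pack the group and local interface addresses for the join call.

    "0.0.0.0" lets the operating system choose the interface.
    """
    return struct.pack("4s4s", socket.inet_aton(group),
                       socket.inet_aton(interface))


def join_multicast_stream(group, port, interface="0.0.0.0"):
    """Open a UDP socket and ask the network for the multicast stream."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM,
                         socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    build_membership_request(group, interface))
    return sock


# Usage (hypothetical address and port taken from a session description):
# sock = join_multicast_stream("239.0.0.1", 5004)
# packet, sender = sock.recvfrom(2048)
```

No message is exchanged with the sender at all; the switches and routers forward the stream to the receiver's port once they see the membership report.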

Going forward

After reaching this point in the article, you may have one of two questions: either you want to know if AES67 will affect your current system in some fashion; or alternatively, if it will affect future purchase decisions.

According to its website, Axia and the other companies in the Telos Alliance have been involved with AES-X192 since its inception. I communicated directly with Axia to get its thoughts on AES67. “We finally have a standard for AoIP audio transport in AES67 – and that’s great!” said Marty Sacks, VP of Axia Audio. “Ultimately, the goal is for every studio audio device to click together with CAT-5 and share audio. But along with that shared audio, there’s a whole world of other functionality that broadcasters expect, like device start/stop functions, monitor mutes, on-air tallies, the ability to control peripherals from the console, the ability to know when an audio source is live and ready for air, the ability for playout systems to control fader on/off functions and more. Those are functions that AES67 alone doesn’t provide for. AES67 is a great start toward a unified standard, but when the first AES67 devices hit the marketplace, they will also need to support this additional functionality, no matter whose system they use, in order to provide an integrated control experience for the user – otherwise they’re going to be no better than AES3 streams, with serial GPI cables running alongside.”

I also communicated directly with Wheatstone for its thoughts regarding AES67. “Wheatstone was very involved in the AES-X192 group that developed the new AES67 standard, which gives us that very important first step of transport interoperability,” said Andrew Calvanese, Wheatstone VP of engineering. “We are evaluating our WheatNet-IP system so that our customers can take advantage of AES67 but with an eye on a much wider goal of total interoperability that includes discovery and control. AES67 is the first step in what we hope will lead to a totally interoperable environment that includes not only transport, but the means to control and discover all devices and functions within the network. We are working with other engineering teams to standardize on the control and discovery protocols that can make that happen at an overall, interoperable level.”

Logitek intends to be compliant with the AES67 standard by spring of 2014; it will do the necessary development work this winter and plans to have the project completed by April.

To reiterate, AES67 is simply a means to give (formerly) disparate AoIP systems a way to talk to one another, using existing protocols; no new protocols have been developed in this process. It’s too early to tell what impact AES67 will have in broadcasting, but the adoption of standards in technology usually provides benefits down the road.

Irwin is RF engineer/project manager for Clear Channel Los Angeles. Contact him at [email protected].
