iHeartMedia’s plan to use Veritone’s voice-cloning technology for its podcast platform has some radio industry observers asking the obvious questions: How good does it sound, and is broadcast radio far behind?

The largest radio company in the United States says that for now, the synthetic voice solution will only be used to translate podcasts from English to other languages for use on the iHeartPodcast Network, first for Spanish-speaking audiences. But Veritone officials confirm its technology could someday be used for advertising to reduce time-to-market and production costs for radio.

One veteran broadcast engineer said Veritone’s voice cloning product is exactly the sort of tech breakthrough that media are quickly adopting as the industry embraces cost-saving measures, and could at the very least bring a more centralized approach to commercial production and staffing by leveraging artificial intelligence.

There are literally dozens of text-to-speech apps available commercially, many of which can convert text into human-like speech, even if the result still sounds a bit robotic. Observers familiar with this technology say some of the services used on news websites are becoming good enough to be “almost indiscernible from real human voices.”

But what Veritone and iHeartMedia are trotting out appears to be an effort to take synthetic speech and voice cloning to another level, according to those familiar with the AI platform.

Veritone says “hyper-realistic custom voice clones” will offer increased revenue streams for branded synthetic voices of top talent — imagine the cloned voice of Ryan Seacrest someday pitching for the local hardware store.

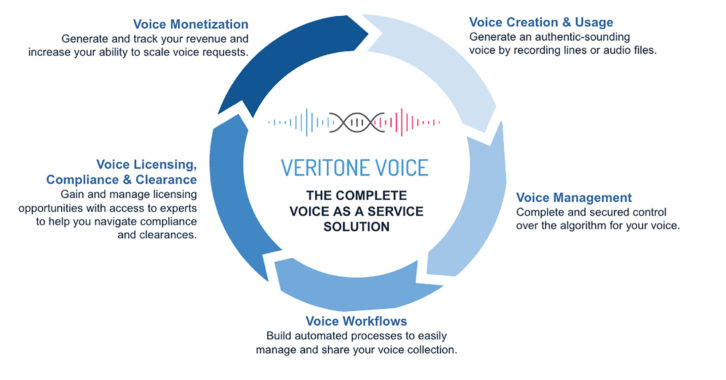

Veritone launched Veritone Voice in 2021; the company says the product can “control and manage the entire voice creation lifecycle for efficiency and scale.” Veritone says its synthetic voice solution will give iHeartMedia the opportunity to reach new audiences at scale with its current top podcast talent.

“With no additional studio time, voice talent can authorize Veritone’s synthetic voice solution to automatically produce more podcasts, advertisements and additional audio in multiple languages with the same energy, cadence and uniqueness of top talent,” the technology company says.

Veritone, which reported its first quarterly profit in Q4 2021, says its cloning software ingests several hours of audio and uses AI to train the system to produce the synthetic voice. “The more audio, the more life-like the results,” its executives say (see sidebar story below).

CEO Chad Steelberg said on an earnings call this year that, in addition to the iHeart agreement, the AI company is inking deals in a number of voice markets beyond podcasting, such as audiobooks, production studios, audio advertising and digital influencers.

Veritone has been aggressively pursuing tech startups specializing in synthetic voice development, according to its press releases. Its recent acquisition of VocaliD will “further enhance the company’s existing synthetic voice offerings for commercial enterprise including brands, podcasters, broadcasters, studios, publishers and corporations,” according to Veritone.

Conal Byrne, chief executive officer at iHeartMedia Digital Audio Group, says the media company is in the “test phase” of using voice cloning to translate podcasts from English to multiple other languages.

The audio company says it plans to use Veritone’s technology to allow celebrities, athletes, influencers, broadcasters, podcasters and other talent to create and monetize synthetic voices that can be transformed into different languages, dialects and accents for its podcast network.

“This is really about audience expansion,” Byrne said. “The artificial intelligence that very smart people are working on to power voice technology is progressing a mile a minute. And in a lot of ways it is going to explode open the audience of podcast creators and broadcast radio influencers. The more we look into voice technology, the more we see it accelerating audience growth.”

Byrne says iHeart will be “careful and respectful” of creators’ voices. “Nothing will happen without the creators’ approval, input and collaboration, as it should be with any new technology.”

“Anything you can read”

Text-to-speech technology has now reached the point where anything that is text-based is, or soon will be, convertible to audio, Byrne said.

“Anything you can read is now convertible into audio. That interests us since we are an audio company. That brings us a lot of really interesting opportunities when it comes to people’s audio journey. The world is going to audio.”

It is not clear yet whether iHeart’s podcast platform will disclose to audiences in advance of programming that synthetic voice is in use.

“It’s too early yet to address disclosure and exposure. Much of it will depend on when we discover how audiences really feel about it,” he said.

Byrne says iHeartMedia hopes voice AI will eventually allow it to customize advertising on the iHeartPodcast Network for individual markets.

“If I capture enough of the creator’s voice with the cloning technology, through customization and tailoring, we can create advertising to local markets the way we can’t right now.”


And it’s that ability for audio companies to manipulate audio via synthetic voice that excites iHeart and advertisers.

“It’s a whole other thing to be able to tailor those ads to the 158 markets that iHeart trades in and has local boots on the ground selling. Thanks to this new technology advertisers won’t be limited to national campaigns. Perhaps now they will want to customize ads for 12 or 13 geo territories or cities they want to market in,” he said.

“As an audio company — whose stock in trade is to give creators the opportunity to gain as much audience as they can and to give brands access to those audiences in smart and integrated ways — it’s hard not to get excited about.”

Byrne said he can confirm iHeart has yet to begin testing “same-language synthetic voice tailoring or customization” but added the audio company has “certainly thought a lot about it.”

“Advertising customization using voice technology” for podcast and broadcast is going to be the logical next step, he said. “But we have no timeline to move forward with it.”

Coming to market

It’s not much of a stretch to think that voice cloning technology will begin leaking into broadcast, says one observer who is intrigued by its possible introduction to United States radio.

“Some have voiced concern as this tech jumps to America,” said Gary Kline, former director of engineering at Cumulus and now a radio engineering consultant.

“The next thing we know, we have cloned voices doing middays. Will that eventually happen? Yes. I do think so. At first, I think we will see AI used for commercial production. In fact, I’ve already seen and heard examples of this in Europe.”

Kline notes that synthetic voice is already being used for small-market radio weather reads via Westwood One’s partnership with the Weatherology service, which provides text-to-voice localized weather forecasts customized for radio station clients.

From the Westwood One website: “The extraordinary text-to-voice Weatherology system delivers forecasts directly to your local automation system for play in the next scheduled weathercast position, or in the case of severe weather alerts — as the next event — depending on how you choose your custom setup.”

And the National Weather Service has used text-to-speech (TTS) computerized voices to read weather watches and warnings across the country for several decades, though those voices have often been criticized for mispronunciations. NWS introduced a new voice in 2016, nicknamed Paul.

One industry engineering observer told Radio World he has grown weary of the NWS voice software that is “frequently off with its inflection and typically lacks any human emotion.”

He continued: “Imagine having a naturally spoken synthetic voice consistently and clearly delivering alerts rather than the crude text-to-speech mechanisms or poorly relayed recordings we currently have. Weather forecasts and alerts would be a related application.”

Radio World will continue to watch developments in synthetic voice tools, which use AI at the heart of their algorithmic engines.


More on Veritone’s synthetic voice AI

We asked Sean King, senior vice president and general manager of commercial enterprise at Veritone, to explain the AI behind the company’s synthetic voice technology and just what it might be capable of some day.

Radio World: What kind of deep-learning artificial intelligence is involved in creating synthetic and cloned voices?

Sean King: For synthetic AI-generated human voice, at a basic level, there are two ways you can break this down.

First, stock or generic voices that sound like a real human but do not replicate an individual voice.

And secondly, custom voice, which is a process where an individual’s unique voice is cloned. Once all approvals are in place with the consent of the talent, a custom voice model can be created with about three hours of clean audio. From there, the voice model can generate new material with that individual’s voice from either text (text to speech) or audio (speech to speech).

It could be possible to create a completely new voice by using additional AI to combine generic AI voices and adjust pitch, tone, cadence and so on. Veritone has not yet been involved in any use cases of this nature.

RW: Has Veritone undertaken studies to determine whether listeners can tell the difference between real and synthetic?

King: We have not conducted formal studies here. Stock voices are getting much more realistic. In short form, such as radio liners, it can be difficult to hear the difference. In long-form, most listeners would be able to pick up on some of the AI generated nuances.

Custom voices using voice cloning, on the other hand, can be nearly indistinguishable.

RW: Do audio services run the risk of eroding the intimacy and trust that are inherent radio values by deploying synthetic cloned voices?

King: With a custom voice, the voice model includes the talent’s — in this case the podcast host’s — natural cadence and uniqueness of their voice. It is truly their voice. And in fact, with Veritone, they own their voice clone.

With the ability to localize their voice and release their show in multiple languages, they could actually form an intimate relationship with new audiences they couldn’t otherwise, such as the Latinx market.


RW: Are there any moral and ethical implications of using synthetic voice technology? Should there be a requirement that the listener is informed they are not listening to a human voice on iHeart podcasts?

King: It’s up to the talent and company what, if any, disclaimers are used. Again, there are scenarios when it’s a best practice to give a disclaimer. On the technology side, Veritone creates an inaudible watermark on all voice recordings for further verification.

RW: What do you see as the next evolution or phase in AI and cloned vocals?

King: Synthetic voice combined with avatar technology (3D human clones) to create more humanized conversational AI for uses such as customer service, sales, recruiting, patient care and more.

RW: Veritone says in a press release that the technology can be used for advertising to cut production costs for broadcast radio. Is it Veritone’s hope to have iHeart use it for such, or are you pitching the technology to other radio broadcast groups?

King: Veritone is in conversations with several major radio conglomerates globally, as well as numerous stations.

RW: And do you foresee a time when your synth voice technology could be used to fill on-air shifts at radio stations?

King: Synthetic voice could certainly one day be used for liners, sweepers, station imaging and commercials. And on-air talent can let their voice clone do some work for them while they handle other priorities, especially if they need to travel, have a family emergency, or if their voice is compromised from an illness.

Veritone does not wish to replace humans, ever; our goal is to help take the burden off when possible while creating efficiencies to increase productivity.